XGBoost is a decision-tree-based ensemble machine learning algorithm that uses a gradient boosting framework. An information system is a combination of hardware, software, and telecommunication networks that people build to collect, create, and distribute useful data, typically in an organization.


The objective of an information system is to gather data and provide appropriate information to the user.

XGBoost was introduced by Tianqi Chen and is currently part of a wider toolkit by the DMLC (Distributed Machine Learning Community). In this post you will discover the effect of the learning rate in gradient boosting and how to ... Hyperparameters and configurations used for building the model.

It defines the flow of information within the system. Up to this point, the procedure is the same as in the ordinary gradient boosting technique. One effective way to slow down learning in a gradient boosting model is to use a learning rate, also called shrinkage (or eta in the XGBoost documentation).
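To make the effect of the learning rate concrete, here is a minimal sketch of plain gradient boosting for squared-error regression, using one-split decision stumps as weak learners. It is not XGBoost's implementation; the helper names (`fit_stump`, `boost`) and the toy data are assumptions made up for this illustration. Each round fits a stump to the current residuals and adds only a `learning_rate` fraction of its prediction.

```python
def fit_stump(xs, residuals):
    """Find the single threshold split that minimises squared error on the residuals."""
    best = None
    for t in sorted(set(xs))[:-1]:  # skip the largest x so the right side is never empty
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        left_value = sum(left) / len(left)
        right_value = sum(right) / len(right)
        err = sum((r - left_value) ** 2 for r in left)
        err += sum((r - right_value) ** 2 for r in right)
        if best is None or err < best[0]:
            best = (err, t, left_value, right_value)
    return best[1:]


def boost(xs, ys, n_rounds, learning_rate):
    """Return the training MSE after each boosting round."""
    base = sum(ys) / len(ys)          # initial prediction: the mean target
    preds = [base] * len(ys)
    history = []
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, preds)]
        t, left_value, right_value = fit_stump(xs, residuals)
        # Shrinkage: add only a fraction of the new stump's output.
        preds = [
            p + learning_rate * (left_value if x <= t else right_value)
            for x, p in zip(xs, preds)
        ]
        history.append(sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys))
    return history


# Toy data, invented for this example.
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.2, 1.9, 3.2, 3.8, 5.1, 6.3, 6.8, 8.1]

fast = boost(xs, ys, n_rounds=5, learning_rate=1.0)
slow = boost(xs, ys, n_rounds=5, learning_rate=0.1)
print("training MSE per round, learning_rate=1.0:", fast)
print("training MSE per round, learning_rate=0.1:", slow)
```

With the smaller learning rate the training error falls more slowly, so more rounds are needed to reach the same fit. That slower descent is what gives shrinkage its regularizing effect.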

For XGBoost, some new terms are introduced: λ (the regularization parameter), γ (the threshold for automatic tree pruning), and eta (the learning rate, which controls how quickly the model converges). A good understanding of gradient boosting will be beneficial as we progress. The three algorithms in scope, CatBoost, XGBoost, and LightGBM, are all variants of gradient boosting algorithms.
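As a rough illustration, these three terms correspond to the `lambda`, `gamma`, and `eta` keys in XGBoost's native parameter dictionary. The values below are placeholders chosen for the example, not recommendations, and the commented-out training call assumes the `xgboost` package is installed and that `dtrain` is a hypothetical `DMatrix` built from your own data.

```python
# Parameter dictionary in the style of XGBoost's native API.
params = {
    "objective": "reg:squarederror",  # squared-error regression
    "eta": 0.1,      # learning rate (shrinkage) applied to each new tree
    "gamma": 1.0,    # minimum gain required to keep a split (pruning threshold)
    "lambda": 1.0,   # L2 regularization on leaf weights
    "max_depth": 3,
}

# Assumed usage (requires xgboost and a DMatrix named dtrain, both hypothetical here):
# import xgboost as xgb
# booster = xgb.train(params, dtrain, num_boost_round=100)
```

In the scikit-learn style wrapper, the same settings are exposed as `learning_rate`, `gamma`, and `reg_lambda`.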

XGBoost (eXtreme Gradient Boosting) is a machine learning algorithm that focuses on computation speed and model performance. Before we get started: XGBoost is a gradient boosting library with a focus on tree models, which means that inside XGBoost there are 2 distinct parts. The algorithm can be used for both regression and classification tasks and has been designed to work with large ...

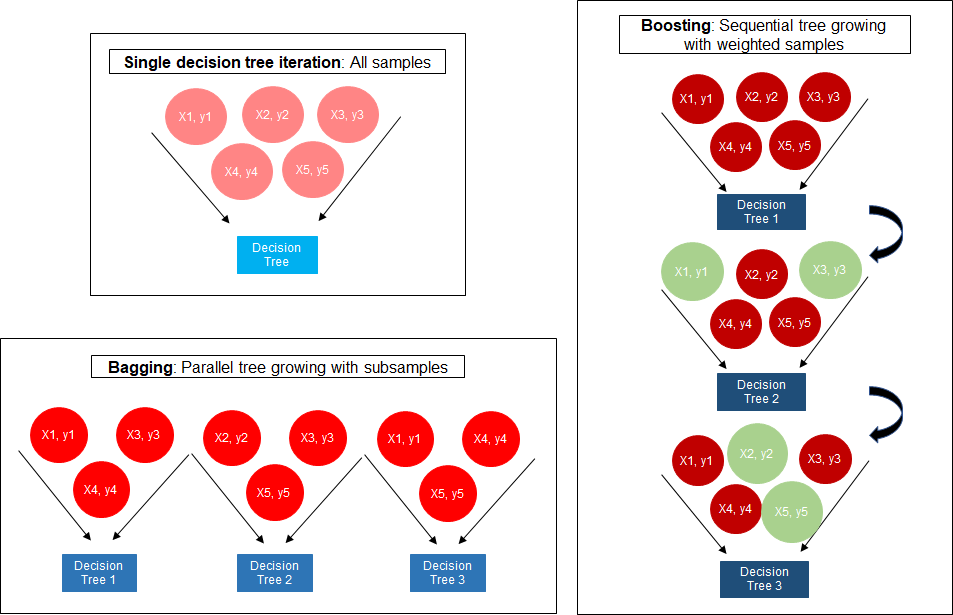

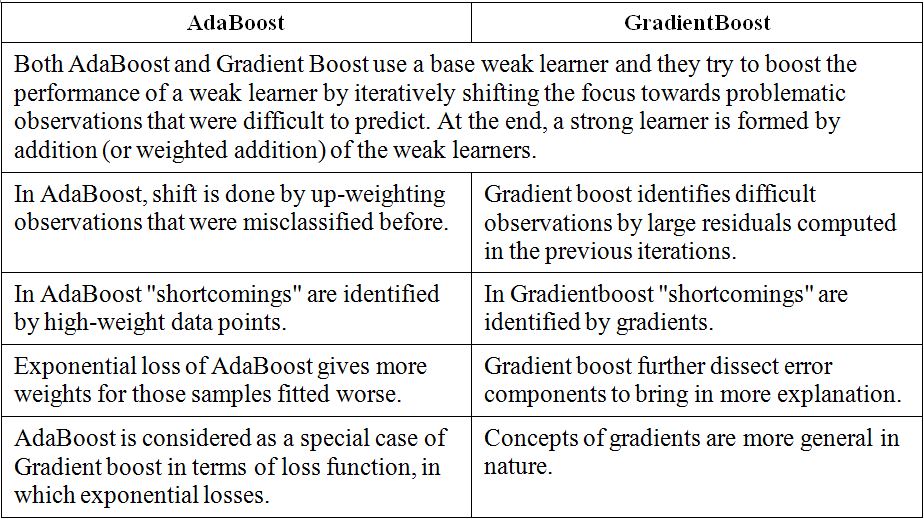

Difference between different tree-based techniques. If you come from the Deep Learning community, it should be clear to you that there are differences between the neural ... Difference between Gradient Boosting and Adaptive Boosting (AdaBoost).

A problem with gradient boosted decision trees is that they are quick to learn and overfit the training data. Extreme Gradient Boosting (XGBoost) is one of the most popular variants of gradient boosting. A Management Information System (MIS) consists of the following three pillars.

Gradient boosting algorithms can be a Regressor (predicting continuous target variables) or a Classifier (predicting categorical target variables). Now calculate the similarity score: Similarity Score (SS) $= \dfrac{SR^2}{N + \lambda}$. Here SR is the sum of residuals, N is the number of residuals, and λ is the regularization parameter. XGBoost is basically designed to enhance the performance and speed of ...
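The similarity score for regression, SS = SR² / (N + λ), can be worked through by hand. The sketch below computes it for a small set of residuals and then uses it to score a candidate split, with gain taken as left SS + right SS − root SS and the split kept only if the gain exceeds γ. The function names and the residual values are assumptions made up for this example, not part of the XGBoost API.

```python
def similarity_score(residuals, lam):
    """SS = (sum of residuals)^2 / (number of residuals + lambda)."""
    total = sum(residuals)
    return total ** 2 / (len(residuals) + lam)


def split_gain(left, right, lam):
    """Gain of splitting a node into left/right: SS(left) + SS(right) - SS(parent)."""
    parent = left + right
    return (
        similarity_score(left, lam)
        + similarity_score(right, lam)
        - similarity_score(parent, lam)
    )


# Illustrative residuals (observed minus predicted), invented for this example.
residuals = [-10.5, 6.5, 7.5, -7.5]

root_ss = similarity_score(residuals, lam=0.0)        # (-4)^2 / 4
root_ss_reg = similarity_score(residuals, lam=1.0)    # (-4)^2 / 5, shrunk by lambda

# Candidate split: first residual on the left, the rest on the right.
gain = split_gain([-10.5], [6.5, 7.5, -7.5], lam=0.0)

gamma = 130.0                  # hypothetical pruning threshold
keep_split = gain - gamma > 0  # the split is pruned when gain does not exceed gamma

print(root_ss, root_ss_reg, gain, keep_split)
```

A larger λ lowers every similarity score and therefore every gain, which makes splits easier to prune for a given γ.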

A Decision Support System (DSS) is an interactive, flexible, computer-based information system or sub-system intended to help decision makers use communication technologies, data, and documents to identify and solve problems, complete decision process tasks, and make decisions. The model consisting of trees and ...

